Onboarding with Your Docker Image

This section assumes you have read Create Model Card with YAML File.

Create Model Card with Existing Docker Image

In your config.yaml file, set the inference_image_name field to the name of your Docker image.

inference_image_name: $your_image_name

Also set enable_custom_image to true in the config.yaml file.

enable_custom_image: true

Then, under the bootstrap key, write the commands that install the requirements.

bootstrap: |
  echo "Installing dependency..."

Finally, under the job key, write the command that starts the inference service.

job: |
  python3 main_entry.py
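Taken together, the four keys might sit in one config.yaml roughly as in the sketch below. It only combines the fragments from this section: `$your_image_name` is the placeholder used earlier and has to be replaced with a real image name, and any other keys your model card needs (see Create Model Card with YAML File) are left out here.

```yaml
# Sketch of the custom-image portion of config.yaml (other model card keys omitted).

# Placeholder from this section; replace with the name of your own Docker image.
inference_image_name: $your_image_name

# Tells the deployment to use the image named above.
enable_custom_image: true

# Commands that install the requirements. The body of a block scalar ("|")
# must be indented under its key.
bootstrap: |
  echo "Installing dependency..."

# Command that starts the inference service.
job: |
  python3 main_entry.py
```

The `|` block scalar keeps each line of the script as written, so multi-line bootstrap or job scripts can be added by indenting further lines to the same level.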

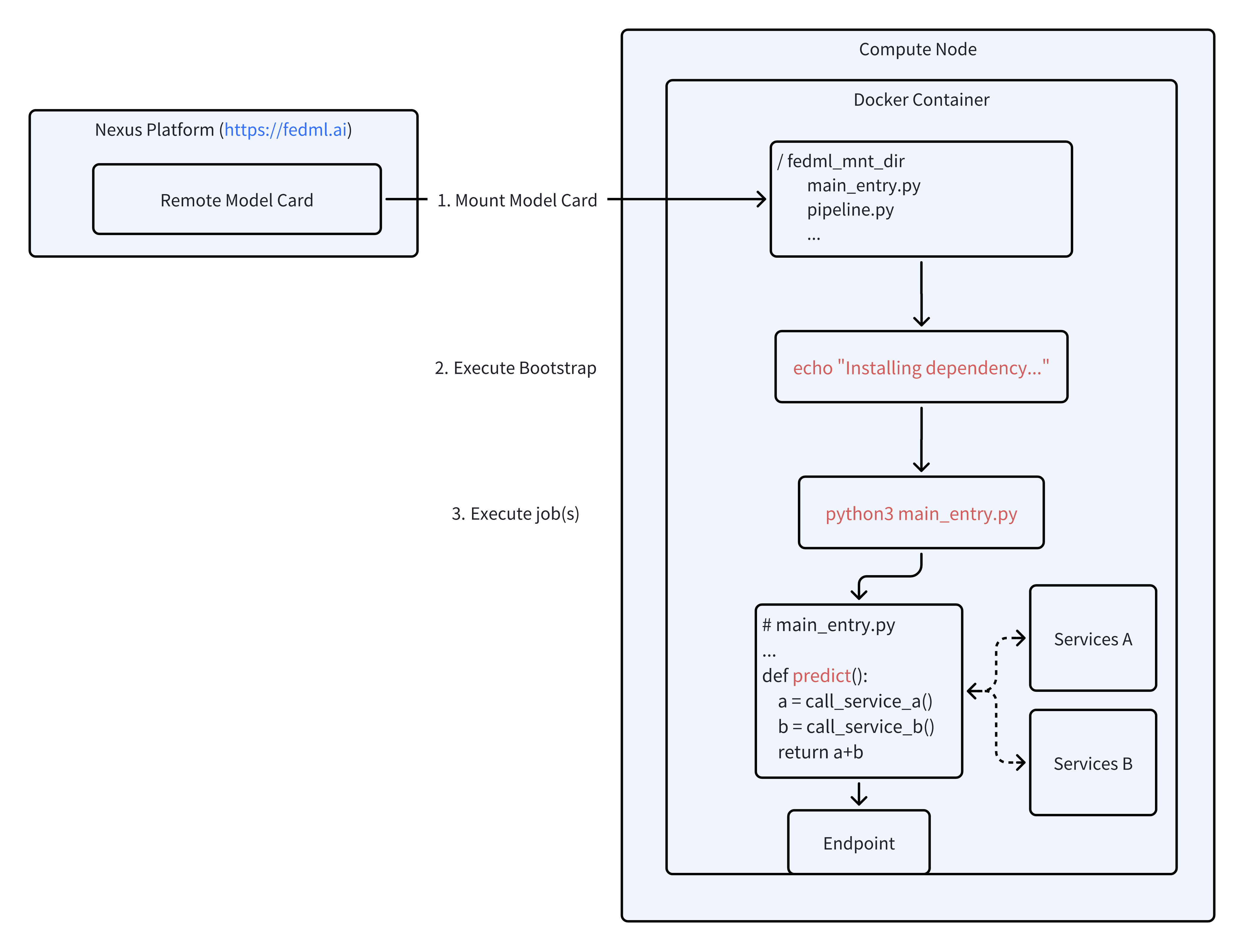

A graph of the procedure that TensorOpera Library will internally do when you deploy this model card is shown below.